The following chapter introduces some terminology that you will encounter when working with eCognition software.

In eCognition, an image layer is the most basic level of information contained in a raster image. All images contain at least one image layer.

A grayscale image is an example of an image with one layer, while the most common layers are the red, green and blue (RGB) layers that combine to form a color image. Image layers can also hold other data available for analysis, such as near-infrared (NIR) bands in remote sensing images, geographical elevation models together with intensity data, or GIS information containing metadata.

eCognition allows the import of these raster image layers. It also supports thematic raster or vector layers, which can contain qualitative and categorical information about an area (for example, a layer that acts as a mask to identify a particular region).
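To make the distinction concrete, the sketch below models a small scene as a set of named image layers (red, green, blue and NIR) plus a thematic layer used as a mask. The layer names, pixel values and the `masked_mean` helper are hypothetical illustrations in plain Python, not eCognition's data model or API.

```python
# A hypothetical 2 x 3 scene: each image layer is a grid of pixel values.
image_layers = {
    "red":   [[120, 130, 125], [90, 95, 100]],
    "green": [[110, 115, 112], [140, 150, 145]],
    "blue":  [[100, 105, 102], [60, 65, 70]],
    "nir":   [[40, 42, 41], [200, 210, 205]],
}

# A thematic layer acting as a mask: 1 marks the region of interest.
thematic_mask = [[0, 0, 0], [1, 1, 1]]


def masked_mean(layer, mask):
    """Mean of one image layer over the pixels where the mask is set."""
    values = [v for row, mrow in zip(layer, mask)
              for v, m in zip(row, mrow) if m]
    return sum(values) / len(values)


print(len(image_layers))                                # 4
print(masked_mean(image_layers["nir"], thematic_mask))  # 205.0
```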

eCognition software handles both two-dimensional images and multidimensional data sets:

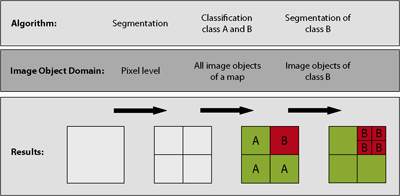

The first step of an eCognition image analysis is to divide the image into pieces that serve as building blocks for further analysis. This step is called segmentation, and several algorithms are available for it.
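One of the simplest segmentation schemes to picture is splitting the image into a regular grid of square tiles, the idea behind eCognition's chessboard segmentation. The sketch below assumes that a segmentation result can be represented as a label grid in which each pixel stores the ID of the object it belongs to; the function name and this representation are illustrative choices, not eCognition's implementation.

```python
def chessboard_segmentation(width, height, tile_size):
    """Return a label grid assigning each pixel the ID of its square tile."""
    # Number of tiles per row, rounding up so partial tiles at the edge count.
    tiles_per_row = (width + tile_size - 1) // tile_size
    labels = []
    for y in range(height):
        row = []
        for x in range(width):
            row.append((y // tile_size) * tiles_per_row + (x // tile_size))
        labels.append(row)
    return labels


labels = chessboard_segmentation(4, 4, 2)
for row in labels:
    print(row)
# [0, 0, 1, 1]
# [0, 0, 1, 1]
# [2, 2, 3, 3]
# [2, 2, 3, 3]
```

Each distinct label is one image object; later steps work with these objects rather than with individual pixels.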

The next step is to label these objects according to their attributes, such as shape, color and position relative to other objects. This is typically followed by another segmentation step to yield more functional objects. This cycle is repeated as often as necessary; the hierarchies it creates are described in the next section.
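Labeling objects by their attributes can be sketched as computing a feature per object and applying a rule to it. The example below assumes a label grid like the one produced by a segmentation, uses mean intensity (a color attribute) as the only feature, and applies a hypothetical threshold rule: bright objects become class `A`, dark ones class `B`. Shape and relative position, also mentioned above, are omitted for brevity.

```python
from collections import defaultdict


def object_means(image, labels):
    """Mean pixel value of each image object, keyed by object label."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for img_row, lab_row in zip(image, labels):
        for value, label in zip(img_row, lab_row):
            sums[label] += value
            counts[label] += 1
    return {label: sums[label] / counts[label] for label in sums}


def classify(means, threshold):
    """Hypothetical rule: bright objects are class 'A', dark objects 'B'."""
    return {label: ("A" if m >= threshold else "B")
            for label, m in means.items()}


image = [
    [200, 210, 10, 20],
    [190, 205, 15, 25],
    [30, 40, 220, 230],
    [35, 45, 215, 225],
]
labels = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [2, 2, 3, 3],
    [2, 2, 3, 3],
]

classes = classify(object_means(image, labels), threshold=128)
print(classes)  # {0: 'A', 1: 'B', 2: 'B', 3: 'A'}
```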

An image object is a group of pixels in a map. Each object represents a definite space within a scene and objects can provide information about this space. The first image objects are typically produced by an initial segmentation.

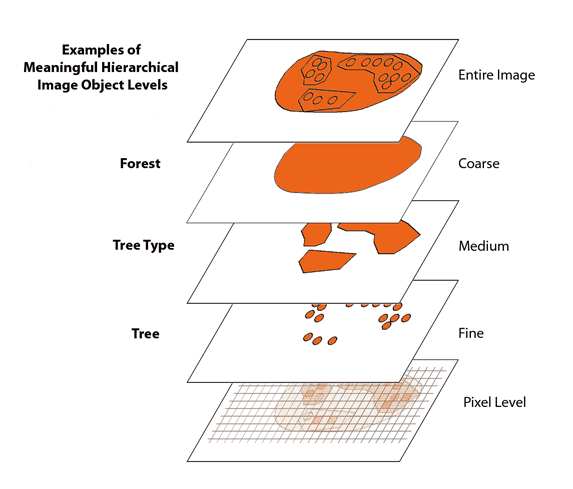

An image object hierarchy is a data structure that incorporates the image analysis results extracted from a scene. The concept is illustrated in the figure below.

It is important to distinguish between image object levels and image layers. Image layers represent data that already exists in the image when it is first imported. Image object levels store image objects, which are representative of this data.

The scene below, an image of a forest, is represented at the pixel level. Each level can have a super-level above it, where multiple objects may be combined and assigned to a single class. For example, the forest level is the super-level of a level containing groups of tree types, and these tree types can in turn consist of single trees on a sub-level.

Every image object is networked so that it knows its context: its neighbors, the levels and objects above it (superobjects) and those below it (sub-objects). No image object may have more than one superobject, but it can have multiple sub-objects.
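The networked structure described above can be sketched as a node that stores one superobject, a list of sub-objects and a set of neighbors. The `ImageObject` class below is a hypothetical illustration, not eCognition's internal representation; it enforces only the rule stated in the text, that an object has at most one superobject.

```python
class ImageObject:
    """Hypothetical node in an image object hierarchy (not the eCognition API)."""

    def __init__(self, name):
        self.name = name
        self.superobject = None  # at most one object above
        self.sub_objects = []    # any number of objects below
        self.neighbors = set()   # adjacent objects

    def add_sub_object(self, child):
        # Enforce the rule that an object has no more than one superobject.
        if child.superobject is not None:
            raise ValueError(f"{child.name} already has a superobject")
        child.superobject = self
        self.sub_objects.append(child)

    def add_neighbor(self, other):
        # Neighborhood is symmetric: both objects record each other.
        self.neighbors.add(other)
        other.neighbors.add(self)


forest = ImageObject("forest")
conifers = ImageObject("conifers")
broadleaf = ImageObject("broadleaf")
forest.add_sub_object(conifers)
forest.add_sub_object(broadleaf)
conifers.add_neighbor(broadleaf)

tree = ImageObject("tree 1")
conifers.add_sub_object(tree)

print(tree.superobject.superobject.name)     # forest
print([o.name for o in forest.sub_objects])  # ['conifers', 'broadleaf']
try:
    broadleaf.add_sub_object(tree)
except ValueError as err:
    print(err)  # tree 1 already has a superobject
```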

The domain describes the scope of a process; in other words, which image objects (or pixels or vectors) an algorithm is applied to. For example, an image object domain is created when you select objects based on their size.
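As a sketch of a size-based image object domain, the example below filters a hypothetical list of objects by pixel count and then applies a simple algorithm (assigning a class) only to the objects in that domain. The object records and function names are assumptions made for illustration.

```python
# Hypothetical image objects with their size in pixels.
objects = [
    {"id": 1, "size": 12, "class": None},
    {"id": 2, "size": 340, "class": None},
    {"id": 3, "size": 57, "class": None},
    {"id": 4, "size": 910, "class": None},
]


def size_domain(objects, min_size):
    """Domain: the objects with at least min_size pixels."""
    return [obj for obj in objects if obj["size"] >= min_size]


def assign_class(domain, class_name):
    """A simple algorithm that only touches objects inside the domain."""
    for obj in domain:
        obj["class"] = class_name


domain = size_domain(objects, min_size=100)
assign_class(domain, "large")
print([(o["id"], o["class"]) for o in objects])
# [(1, None), (2, 'large'), (3, None), (4, 'large')]
```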

A segmentation-classification-segmentation cycle is illustrated in the figure below. The square is segmented into four regions, which are classified as A or B. Region B then undergoes further segmentation. The relevant image object domain is listed underneath the corresponding algorithm.
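The cycle can be approximated in a few lines. The sketch below assumes a square described as `(x, y, size)` and a hypothetical classification rule (only the top-left quadrant is class `A`), since the figure's actual criteria are not given in the text. Comments name the domain each step works on, and for simplicity all objects are kept in one flat list rather than on separate levels.

```python
def split_into_four(region):
    """Segment a square region (x, y, size) into four equal quadrants."""
    x, y, size = region
    half = size // 2
    return [(x, y, half), (x + half, y, half),
            (x, y + half, half), (x + half, y + half, half)]


def classify(region):
    # Hypothetical rule: the top-left quadrant is class "A", all others "B".
    x, y, _ = region
    return "A" if (x, y) == (0, 0) else "B"


# Segmentation. Domain: the whole square at the pixel level.
objects = split_into_four((0, 0, 8))

# Classification. Domain: all objects produced by the first segmentation.
classes = {region: classify(region) for region in objects}

# Segmentation again. Domain: only the objects classified as "B".
refined = []
for region in objects:
    if classes[region] == "B":
        refined.extend(split_into_four(region))
    else:
        refined.append(region)

print(len(refined))  # 13: one class-A object plus 3 x 4 new sub-regions
```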

You can also define domains by their relations to image objects of parent processes, for example, sub-objects or neighboring image objects.
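Domains defined by relations can be sketched as functions of the object a parent process is currently visiting. The lookup tables and function names below are hypothetical; the sketch only shows how a sub-object domain and a neighbor domain could be derived from that object.

```python
# Hypothetical lookup tables describing an image object hierarchy.
superobject_of = {"t1": "conifers", "t2": "conifers", "t3": "broadleaf"}
neighbors_of = {
    "conifers": {"broadleaf"},
    "broadleaf": {"conifers", "meadow"},
    "meadow": {"broadleaf"},
}


def sub_object_domain(parent):
    """Objects whose superobject is the parent process's current object."""
    return sorted(o for o, sup in superobject_of.items() if sup == parent)


def neighbor_domain(parent):
    """Objects adjacent to the parent process's current object."""
    return sorted(neighbors_of.get(parent, set()))


# The parent process visits each object in its own domain; the child
# domains are derived from whichever object is currently being visited.
for current in ["conifers", "broadleaf"]:
    print(current, sub_object_domain(current), neighbor_domain(current))
# conifers ['t1', 't2'] ['broadleaf']
# broadleaf ['t3'] ['conifers', 'meadow']
```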

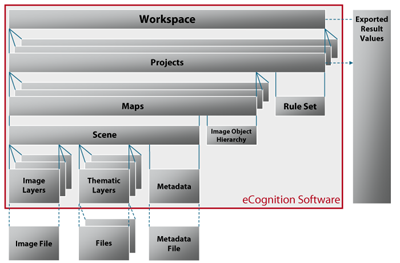

The organizational hierarchy in eCognition software is – in ascending order – scenes, maps, projects and workspaces. As this terminology is used extensively in this guide, it is important to familiarize yourself with it.

On a practical level, a scene is the most basic level in the eCognition hierarchy.

A scene is essentially a digital image along with some associated information. For example, in its most basic form, a scene could be a JPEG image from a digital camera with the associated metadata (such as size, resolution, camera model and date) that the camera software adds to the image. At the other end of the spectrum, it could be a four-dimensional medical image set with an associated file holding a thematic layer of histological data.

The image file and the associated data within a scene can be independent of eCognition software, although this is not always the case. Either way, eCognition imports all of this information and the associated files, which you can then save in an eCognition format, the most basic being an eCognition project (which has a .dpr extension). A .dpr file is separate from the image files and, although the two are linked, does not alter them.

What can be slightly confusing at first is that eCognition creates another hierarchical level between a scene and a project: the map. Creating a project always creates a single map by default, called the main map. Visually, the main map is identical to the original image, and it cannot be deleted.

Maps only become really useful when there is more than one of them; a single project can contain several maps. A practical example is a second map that contains a portion of the original image at a lower resolution. When the image within that map is analyzed, the analysis and information from that map can be applied to the more detailed original.
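The idea of analyzing a coarser map and carrying the result back to the detailed original can be sketched with two resampling steps: block averaging to create the low-resolution copy, and nearest-neighbor upsampling to transfer a result mask back. For simplicity the sketch uses the whole image rather than a portion of it, and the threshold of 100 is an arbitrary stand-in for a real analysis; none of the names are eCognition functions.

```python
def downsample(image, factor):
    """Block-average the image by an integer factor (a lower-resolution copy)."""
    h, w = len(image), len(image[0])
    return [
        [sum(image[y + dy][x + dx]
             for dy in range(factor) for dx in range(factor)) / factor ** 2
         for x in range(0, w - w % factor, factor)]
        for y in range(0, h - h % factor, factor)
    ]


def upsample(mask, factor):
    """Nearest-neighbor upsampling: each coarse pixel covers factor x factor pixels."""
    return [[mask[y // factor][x // factor] for x in range(len(mask[0]) * factor)]
            for y in range(len(mask) * factor)]


original = [
    [10, 12, 200, 210],
    [11, 13, 205, 220],
    [14, 15, 16, 17],
    [18, 19, 20, 21],
]

coarse = downsample(original, 2)                     # analyze this smaller map
coarse_mask = [[v > 100 for v in row] for row in coarse]
fine_mask = upsample(coarse_mask, 2)                 # transfer the result back
print(fine_mask[0])  # [False, False, True, True]
```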

Workspaces are at the top of the hierarchical tree and are essentially containers for projects, allowing you to bundle several of them together. They are especially useful for handling complex image analysis tasks where information needs to be shared.
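The containment relationships in this hierarchy can be summarized with a few hypothetical Python classes. They mirror only what the text states: a project always has an undeletable main map and can hold further maps, and a workspace bundles projects. The class names, the map key `"main"` and the file name are illustrative assumptions, not the eCognition API or file format.

```python
class Scene:
    """An image plus its associated information (e.g. camera metadata)."""

    def __init__(self, image_path, metadata=None):
        self.image_path = image_path
        self.metadata = metadata or {}


class Map:
    """A view of a scene inside a project."""

    def __init__(self, name, scene):
        self.name = name
        self.scene = scene


class Project:
    MAIN = "main"

    def __init__(self, name, scene):
        self.name = name
        # Creating a project always creates the main map by default.
        self.maps = {self.MAIN: Map(self.MAIN, scene)}

    def add_map(self, name, scene):
        self.maps[name] = Map(name, scene)

    def delete_map(self, name):
        if name == self.MAIN:
            raise ValueError("the main map cannot be deleted")
        del self.maps[name]


class Workspace:
    """A container that bundles several projects."""

    def __init__(self):
        self.projects = []

    def add_project(self, project):
        self.projects.append(project)


workspace = Workspace()
scene = Scene("forest.jpg", {"camera model": "unknown"})
project = Project("forest survey", scene)
workspace.add_project(project)
project.add_map("overview", scene)
print(sorted(project.maps))  # ['main', 'overview']
try:
    project.delete_map("main")
except ValueError as err:
    print(err)  # the main map cannot be deleted
```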